Why are your performance results below the theoretical peak?

You will have to do the same calculations for your GPU if you plan on running HPL with your GPU. Considering the CPU running of HPL, we would have a theoretical peak performance: 8 cores * 3.50 GHz * 16 FLOPs/cycle = 448 GFLOPS

32 SP FLOPs/cycle: two 8-wide FMA (fused multiply-add) instructionsĭP stands for double-precision, and SP stands for single-precision. 16 DP FLOPs/cycle: two 4-wide FMA (fused multiply-add) instructions. There’s a Stackoverflow question listing the operations per cycle for a number of recent processor microarchitectures. Skylake is a name of a microarchitecture. After a little snooping on the page, I noticed a link stating Products formerly Skylake. Doing a Google search on Intel(R) Core(TM) i7-6700HQ CPU 2.60GHz, we find that the max frequency considering turbo is 3.5 GHz. For the operations per cycle, we need to dig deeper and search additional information about the architecture. From the model name, I see that I have a Intel(R) Core(TM) i7-6700HQ CPU 2.60GHz, which means the average frequency is 2.60GHz. cat /proc/cpuinfoĪt the bottom of the cpuinfo of my laptop, I see processor: 7, which means that we have 8 cores. First, we’ll look at the number of cores we have. You will come below the theoretical peak FLOPs/second, but the theoretical peak is a good number to compare your HPL results. With benchmarks like HPL, there is something called the theoretical peak FLOPs/s, which is denoted by: Number of cores * Average frequency * Operations per cycle When you run HPL, you will get a result with the number of FLOPs HPL took to complete. HPL is measured in FLOPs, which are floating point operations per second.

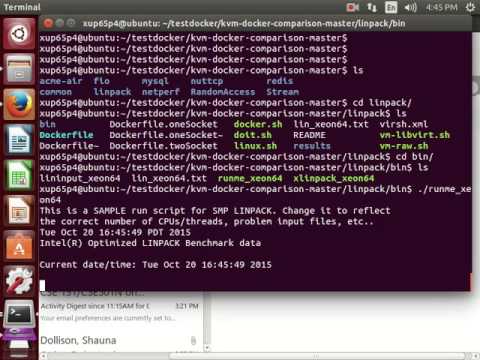

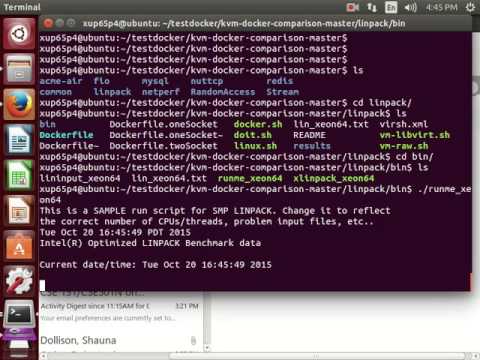

HPL measures the floating point execution rate for solving a system of linear equations. For more information, visit the HPL FAQs. Here’s what we did to improve performance across nodes, but before we get into performance, let’s answer the big questions about HPL. For some reason, we would get the same performance with 1 node compared to 6 nodes. At first, we had difficulty improving HPL performance across nodes. The specs are: 6 x86 nodes each with an Intel(R) Xeon (R) CPU 5140 2.33 GHz, 4 cores, and no accelerators. We’ve been working on a benchmark called HPL also known as High Performance LINPACK on our cluster.
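The theoretical peak arithmetic can be sketched in a few lines of Python. This is only an illustration: the function names `count_cpus` and `peak_gflops` and the `SAMPLE` text are made up for this example, and the parser assumes the x86 Linux `/proc/cpuinfo` layout with `processor`, `physical id`, and `core id` fields. It separates logical processors from physical cores, since the FLOPs/cycle figure applies per physical core.

```python
def count_cpus(cpuinfo_text):
    """Return (logical_processors, physical_cores) from /proc/cpuinfo text."""
    logical = 0
    cores = set()       # unique (physical id, core id) pairs
    physical_id = None  # socket of the processor entry being read
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "processor":
            logical += 1
        elif key == "physical id":
            physical_id = value
        elif key == "core id":
            cores.add((physical_id, value))
    # If the kernel reports no core ids, fall back to the logical count.
    return logical, (len(cores) or logical)


def peak_gflops(cores, ghz, flops_per_cycle):
    """Theoretical peak: cores * frequency (GHz) * FLOPs per cycle."""
    return cores * ghz * flops_per_cycle


# Made-up sample shaped like a 4-core, 8-thread laptop CPU.
# On a real machine, use open("/proc/cpuinfo").read() instead.
SAMPLE = "".join(
    f"processor\t: {i}\nphysical id\t: 0\ncore id\t\t: {i % 4}\n\n"
    for i in range(8)
)

logical, physical = count_cpus(SAMPLE)
peak = peak_gflops(physical, 3.5, 16)  # 3.5 GHz turbo, 16 DP FLOPs/cycle
```

With the sample above, `count_cpus` reports 8 logical processors on 4 physical cores, and `peak` comes out at 224 GFLOPS. Dividing an HPL result by this number gives the fraction of peak the run achieved.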
